How Do AI Agents Work? A Practical Guide to Autonomous AI

At its core, an AI agent works by connecting a powerful language model—the "brain"—with a suite of digital tools. This combination allows it to perceive its environment, make plans, and then act on those plans all on its own.

Think of it this way: a chatbot simply answers your questions. An AI agent, on the other hand, is like a proactive digital worker. It can tackle multi-step projects, like researching competitors or organizing your entire calendar, without you needing to guide it at every turn.

What Exactly Is an AI Agent?

Let's cut through the tech jargon. An AI agent isn't just a program; it's more like a digital project manager. It’s an independent system built to observe a situation, figure out a strategy, and then take the necessary actions to hit a specific goal.

Imagine asking a human assistant to plan a business trip. They wouldn't just spit out a list of flight prices. They’d follow a whole process:

- First, they'd research flight and hotel options.

- Next, they'd compare prices, schedules, and locations to find the best fit.

- Then, they would actually book the reservations using your details.

- Finally, they’d pull it all together into a clean itinerary and pop it on your calendar.

This proactive, goal-driven process is what makes an AI agent so different from a standard AI model. It's a huge leap forward—we're moving from systems that just give us information to systems that get things done. You can see how similar AI concepts are already becoming part of our routines in our guide on how to use AI in daily life.

From Answering to Acting

The ability to act is what truly defines an agent. A standard AI model is brilliant at generating text or analyzing data you feed it. An AI agent takes that a step further. It uses digital tools—like web browsers, code interpreters, and APIs—to interact with the world and carry out its plans.

To really understand how these agents work, it also helps to know a little about the AI agent platforms used to build and deploy them.

AI Agent vs. Standard AI Model At a Glance

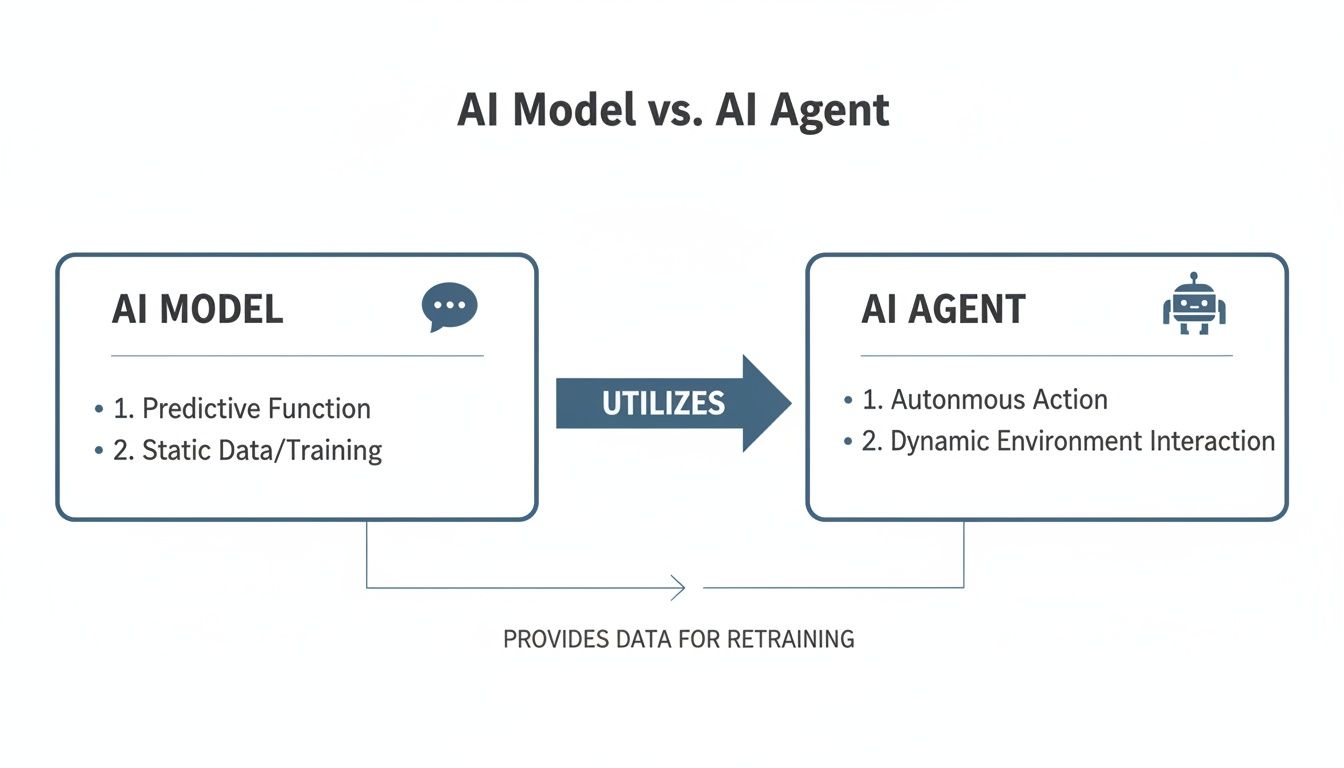

It can be tough to see the difference at first glance, but the distinction is crucial. A standard AI model is a powerful tool for specific tasks, while an AI agent is a complete system that uses those tools to achieve broader goals.

This table breaks it down:

| Capability | Standard AI Model (e.g., GPT-4) | AI Agent |
|---|---|---|
| Primary Function | Responds to prompts, generates content | Achieves goals, completes multi-step tasks |
| Autonomy | Low (Requires human input for each step) | High (Operates independently to reach a goal) |
| Interaction | Passive (Waits for user input) | Proactive (Takes initiative, makes decisions) |
| Tool Usage | Limited (Operates within its own data) | Extensive (Uses external tools like browsers, APIs) |
| Example Task | "Write an email about Q3 earnings." | "Analyze our sales data, draft an email summarizing Q3 earnings, and schedule it to be sent to the leadership team." |

Essentially, you talk to a standard AI model, but you delegate to an AI agent.

This powerful new capability is why the market is exploding. The global AI agents market is expected to jump from $8 billion in 2025 to $48.3 billion by 2030—that's a massive compound annual growth rate of 43.3%.
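As a quick sanity check on that growth rate, here is the standard compound annual growth rate formula applied to the two quoted figures, assuming a five-year span (2025 to 2030):

$$
\text{CAGR} = \left(\frac{V_{\text{end}}}{V_{\text{start}}}\right)^{1/n} - 1 = \left(\frac{48.3}{8}\right)^{1/5} - 1 \approx 0.433
$$

So the start value, end value, and 43.3% rate are consistent with one another.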

By 2026, some industry forecasts predict that 80% of enterprise applications will have these agents built in, enabling them to handle up to 15% of work decisions autonomously. For a deeper dive, check out the full BCC Research report.

Breaking Down the Four Pillars of an AI Agent

So, how does an AI agent actually work? The best way to think about it isn't as a single, monolithic piece of software. It’s more like a small, highly coordinated team, where each member has a specific job. Together, they create a continuous loop of thinking and acting that lets the agent get things done on its own.

This structure is what we mean when we talk about ‘agentic AI.’ These are systems that don't just wait for a command—they perceive their environment, make decisions, and take action. This is a huge leap from your standard chatbot. They operate using four core pillars: perception (gathering data), planning (using an LLM like GPT-4 to reason), action (using tools like a web browser or code interpreter), and memory (remembering what's happened to learn and improve).

This diagram shows the fundamental shift from a passive AI model that just answers questions to a proactive AI agent that gets things done.

The real magic here is that an agent doesn't just process information. It uses that information to actively interact with its environment, constantly cycling through perceiving, thinking, and acting.
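To make the perceive, think, act cycle concrete, here is a minimal Python sketch of the four pillars wired together. Everything in it is a stand-in: the `Agent` class, its method names, and the two toy tools are hypothetical, and the "planning" step is a fixed rule where a real agent would call an LLM.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Toy agent wiring the four pillars together (all names are hypothetical)."""
    goal: str
    memory: list = field(default_factory=list)  # Memory: record of past steps

    def perceive(self, raw_input: str) -> str:
        # Perception: normalise raw input into something the planner can read.
        return raw_input.strip().lower()

    def plan(self, observation: str) -> str:
        # Planning: a real agent would ask an LLM here; this stub uses a fixed rule.
        return "search" if "?" in observation else "summarize"

    def act(self, action: str, observation: str) -> str:
        # Action: dispatch to a tool; both tools are stand-ins that return text.
        tools = {
            "search": lambda text: f"search results for: {text}",
            "summarize": lambda text: f"summary of: {text}",
        }
        return tools[action](observation)

    def step(self, raw_input: str) -> str:
        observation = self.perceive(raw_input)
        action = self.plan(observation)
        result = self.act(action, observation)
        self.memory.append((observation, action, result))
        return result


agent = Agent(goal="answer user questions")
first = agent.step("  What is ReAct?  ")
second = agent.step("ReAct combines reasoning and acting.")
```

Each call to `step` runs one pass through all four pillars, and `agent.memory` ends up holding a record of both passes.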

The Perception Module: The Agent's Senses

Before an agent can do anything, it has to understand the world around it. The Perception module is its set of eyes and ears. It’s responsible for gathering all the raw data from different sources and translating it into a language the agent's "brain" can actually understand.

This data could be anything—the text from your prompt, information scraped from a website, numbers in a database, or even the layout of an app's user interface. Without good perception, an agent is essentially flying blind and can't make smart decisions.
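One way to picture that translation step is a function that takes inputs of different shapes and turns each into the same plain-text form. The sketch below is illustrative only; the `Observation` type, the `perceive` function, and the sample data are all made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    source: str   # where the data came from, e.g. "prompt" or "database"
    content: str  # plain-text rendering handed to the planner


def perceive(source: str, raw) -> Observation:
    """Convert raw input of several shapes into one text format."""
    if isinstance(raw, str):
        text = " ".join(raw.split())  # collapse stray whitespace and newlines
    elif isinstance(raw, dict):
        text = "; ".join(f"{key}={value}" for key, value in sorted(raw.items()))
    elif isinstance(raw, (list, tuple)):
        text = ", ".join(str(item) for item in raw)
    else:
        text = str(raw)
    return Observation(source=source, content=text)


prompt_obs = perceive("prompt", "  Find the best\n flight to London ")
row_obs = perceive("database", {"price": 420, "airline": "ExampleAir"})
```

Whatever the source, the planner downstream only ever sees an `Observation` with a text `content` field.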

The Planning Module: The Agent's Brain

Once the agent has a clear picture of the situation, the Planning module kicks in. This is where the core intelligence lies, usually powered by a Large Language Model (LLM). Think of the LLM as the agent’s central processor—its brain. It’s the part that does the actual reasoning.

The LLM takes the main goal, breaks it down into smaller, more manageable steps, and maps out a clear plan of attack.

For instance, if you told it to "Find the best flight to London," the LLM wouldn't just freeze. It would likely devise a plan that looks something like this:

- Use the web search tool to check Google Flights for options from my location to London for next Tuesday.

- Pull the prices, airlines, and layover details for the top five results.

- Use the search tool again to look up customer satisfaction ratings for those specific airlines.

- Organize the top three choices into a simple, easy-to-read list for the user.

This ability to think step-by-step is what allows agents to tackle complex jobs that would otherwise be impossible.
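In code, a plan like the flight example is often just an ordered list of steps, each naming a tool and an instruction. The sketch below hard-codes that list to show the shape of the data; in a real agent the LLM would generate it. The `Step` type, the tool names, and `plan_flight_search` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Step:
    tool: str         # name of the tool the step needs (hypothetical names)
    instruction: str  # what the tool should do


def plan_flight_search(destination: str, day: str) -> list[Step]:
    """Stand-in for the LLM: returns the four-step plan from the flight example."""
    return [
        Step("web_search", f"Find flights to {destination} for {day}"),
        Step("extract", "Pull prices, airlines, and layovers for the top five results"),
        Step("web_search", "Look up customer satisfaction ratings for those airlines"),
        Step("format", "Organize the top three choices into a list"),
    ]


plan = plan_flight_search("London", "next Tuesday")
for number, step in enumerate(plan, start=1):
    print(f"{number}. [{step.tool}] {step.instruction}")
```

Keeping the plan as structured data, rather than free text, is what lets the next module look up the right tool for each step.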

The Action Module: The Agent's Hands

Of course, a brilliant plan is completely useless if you can't actually do anything. The Action module serves as the agent's hands, giving it the ability to interact with the digital world and execute the plan it just created.

This is where the agent’s "tools" become critical. The LLM itself can't browse the web or run Python code. Instead, it delegates: it outputs a request naming a tool and its inputs, and the software around the model runs that tool and hands back the result.

Key Takeaway: An agent’s real power comes not just from its intelligence, but from the set of tools it has at its disposal. The more tools it can use, the more versatile and capable it becomes.

Some of the most common tools include:

- Web Browsers: For searching Google, reading articles, or even filling out web forms.

- Code Interpreters: To run scripts for data analysis, complex calculations, or working with files.

- APIs (Application Programming Interfaces): To connect with other software and services, like sending an email through Gmail or adding an event to your Google Calendar.
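Here is a rough sketch of how that delegation can look. It assumes the model emits a small JSON request naming a tool and its arguments; the JSON shape, the `dispatch` function, and both toy tools are assumptions for illustration, not any particular framework's API.

```python
import json


def calculator(expression: str) -> str:
    # Very restricted arithmetic tool: only "a op b" with + - * /.
    left, op, right = expression.split()
    a, b = float(left), float(right)
    results = {"+": a + b, "-": a - b, "*": a * b, "/": a / b if b else float("nan")}
    return str(results[op])


def send_email(to: str, subject: str) -> str:
    # Stand-in for an email API call; nothing is actually sent.
    return f"queued email to {to}: {subject}"


TOOLS = {"calculator": calculator, "send_email": send_email}


def dispatch(tool_call_json: str) -> str:
    """Run the tool named in a JSON request such as a model might emit."""
    call = json.loads(tool_call_json)
    name, args = call["tool"], call.get("args", {})
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    return TOOLS[name](**args)


result = dispatch('{"tool": "calculator", "args": {"expression": "6 * 7"}}')
unknown = dispatch('{"tool": "delete_files"}')
```

Because the agent can only reach what is registered in `TOOLS`, the registry doubles as a list of everything the agent is able to do.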

The Memory Module: The Agent's Notebook

Finally, for an agent to learn, improve, and simply keep track of what it's doing, it needs Memory. This module is like the agent's personal notebook, letting it remember what it has done, what it has learned, and where it is in the current task.

Memory generally comes in two flavors:

- Short-Term Memory: This is all about the here and now. It holds context for the current task, like the results of a recent search or the steps in the plan it has already completed. It’s what stops the agent from losing its place.

- Long-Term Memory: This is where the agent stores insights from past experiences. By recalling what worked (and what didn't) on previous jobs, it can get better over time, becoming more efficient and avoiding the same old mistakes. For a deeper dive into the concepts that make this possible, our guide on machine learning for beginners is a great place to start.
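The two kinds of memory can be sketched with ordinary Python containers. This is a simplification: the `AgentMemory` class is hypothetical, and production agents often keep long-term memory in a database or vector store rather than a dictionary.

```python
from collections import deque


class AgentMemory:
    def __init__(self, short_term_size: int = 3):
        # Short-term: a bounded window of recent events for the current task.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: lessons keyed by task type, kept across tasks.
        self.long_term: dict[str, list[str]] = {}

    def remember_event(self, event: str) -> None:
        self.short_term.append(event)  # oldest event drops off when full

    def store_lesson(self, task_type: str, lesson: str) -> None:
        self.long_term.setdefault(task_type, []).append(lesson)

    def recall_lessons(self, task_type: str) -> list[str]:
        return self.long_term.get(task_type, [])

    def start_new_task(self) -> None:
        self.short_term.clear()  # long-term lessons survive


memory = AgentMemory(short_term_size=2)
for event in ["searched flights", "compared prices", "booked ticket"]:
    memory.remember_event(event)
recent = list(memory.short_term)
memory.store_lesson("travel", "check baggage fees before comparing prices")
memory.start_new_task()
```

After `start_new_task`, the short-term window is empty but the travel lesson is still available for the next trip the agent plans.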

Exploring Different AI Agent Architectures

Not all AI agents are built the same way. Think of it like a solo freelancer versus a specialized team—different agent setups are designed for different kinds of jobs. Understanding these core blueprints is key to seeing how an agent can handle everything from a simple search to managing a complex project.

These architectures are what define how an agent "thinks," acts, and solves problems. They can be as simple as a straightforward loop for basic tasks or as intricate as a collaborative network of agents tackling a massive goal together.

The Foundational ReAct Framework

The most common and fundamental architecture you'll encounter is ReAct, which stands for Reason + Act. This framework isn't about creating a rigid, step-by-step plan upfront. Instead, it gives the agent a simple yet powerful feedback loop to guide its behavior in real time.

Here’s how the ReAct loop works:

- Reason: First, the agent looks at the goal and the current situation to figure out the very next logical step. It's just thinking about what to do now.

- Act: It then takes that single action using one of its tools, like running a web search or executing a bit of code.

- Observe: Finally, it looks at what happened. This new information feeds right back into the "Reason" step, allowing the agent to adjust its course as it goes.

This cycle just keeps repeating until the job is done. This simple loop is the engine behind countless single-agent systems, giving them a flexible way to handle tasks where the path to the finish line isn't totally clear from the start.
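The Reason, Act, Observe cycle fits in a few lines of Python. In this sketch the "model" is a scripted function rather than a real LLM, and the lookup tool reads from made-up data, so `scripted_model`, `FACTS`, and `react` are all illustrative names.

```python
def scripted_model(goal: str, history: list[tuple[str, str]]) -> dict:
    """Stand-in for an LLM: picks the next step from what has been observed."""
    observations = [obs for _, obs in history]
    if not observations:
        return {"thought": "I need the population first.", "action": "lookup", "input": "population"}
    if len(observations) == 1:
        return {"thought": "Now I need the area.", "action": "lookup", "input": "area_km2"}
    density = float(observations[0]) / float(observations[1])
    return {"thought": "I can compute the answer.", "action": "finish", "input": f"{density:.1f}"}


FACTS = {"population": "1000000", "area_km2": "500"}  # made-up data
TOOLS = {"lookup": lambda key: FACTS[key]}


def react(goal: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        decision = scripted_model(goal, history)                    # Reason
        if decision["action"] == "finish":
            return decision["input"]
        observation = TOOLS[decision["action"]](decision["input"])  # Act
        history.append((decision["input"], observation))            # Observe
    return "stopped: step limit reached"


answer = react("What is the population density?")
```

The `max_steps` cap is a design choice worth copying: it guarantees the loop ends even if the model never decides it is finished.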

Single-Agent vs. Multi-Agent Systems

While ReAct powers an individual agent's thinking process, these agents can be deployed in two main ways: as a lone worker or as part of a team. The choice between them comes down to the complexity of the task.

One of the biggest shifts in how AI agents work is the move toward multi-agent systems. Instead of one agent trying to juggle everything, you have a team of specialists that collaborate. For example, one agent could be scouting market data while another analyzes investment risks.

This isn't just a niche idea. In major markets like North America, which holds 46% of the global share, enterprise adoption is on track to hit 79% by 2025. What's more, 96% of those organizations plan to expand their use. You can dive deeper into this explosive growth with data from BCC Research.

Let's break down the two approaches.

| Aspect | Single-Agent System | Multi-Agent System |
|---|---|---|
| Structure | One agent handles all sub-tasks from start to finish. | A team of specialized agents, each with a unique role, works together. |
| Complexity | Best for linear, straightforward tasks like summarizing a document. | Ideal for complex, multi-faceted projects like launching a marketing campaign. |
| Analogy | A solo freelancer who researches, writes, and edits an article. | An editorial team with a researcher, a writer, and an editor collaborating. |
| Example | An agent that takes a user query, searches the web, and returns an answer. | A "sales team" of agents: one generates leads, another drafts emails, a third updates the CRM. |

The Power of Collaboration in Multi-Agent Systems

This is where things get really interesting. Multi-agent systems unlock a whole new level of capability. Imagine you ask an agent system to create a detailed business plan. A single agent would likely struggle to manage all the different pieces.

A multi-agent system, on the other hand, could delegate the work beautifully:

- The Research Agent: Scours the web for market data, competitor analysis, and industry trends.

- The Financial Agent: Uses a code interpreter to build financial models and projections.

- The Writing Agent: Takes the output from the other two and drafts the final business plan document.

This division of labor leads to more robust, accurate, and sophisticated problem-solving. It's a similar idea to how AI-powered assistants are becoming more specialized, as we see with tools like Microsoft Copilot. For more on that, check out our ultimate guide to AI-powered assistants.
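The research, financial, and writing hand-off can be sketched as three functions and a small orchestrator. This is a deliberately simple, sequential version; the function names and all figures are invented for the example, and real multi-agent frameworks give each agent its own model calls and tools.

```python
def research_agent(topic: str) -> dict:
    # Stand-in for web research; the figures are invented for the example.
    return {"topic": topic, "market_size_usd": 2_000_000, "growth_rate": 0.25}


def financial_agent(research: dict, years: int = 3) -> list[float]:
    # Projects market size forward with simple compound growth.
    size, rate = research["market_size_usd"], research["growth_rate"]
    return [round(size * (1 + rate) ** year, 2) for year in range(1, years + 1)]


def writing_agent(research: dict, projections: list[float]) -> str:
    lines = [f"Business plan: {research['topic']}"]
    for year, value in enumerate(projections, start=1):
        lines.append(f"Year {year} projected market: ${value:,.0f}")
    return "\n".join(lines)


def run_team(topic: str) -> str:
    """Orchestrator: passes each agent's output to the next one."""
    research = research_agent(topic)
    projections = financial_agent(research)
    return writing_agent(research, projections)


report = run_team("eco-friendly water bottles")
```

Each agent sees only the inputs it needs, which keeps every role small enough to test and replace on its own.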

Once you get a feel for these different architectures, the true versatility of AI agents really starts to click.

AI Agents in the Real World

Theory is great, but seeing AI agents in action is where things really start to click. These aren't just lab experiments anymore; autonomous systems are already out in the wild, tackling some seriously complex jobs. Let's look at a few of the projects and tools that show what these agents can do in practice.

From open-source experiments that blew up online to the powerful toolkits developers use every day, these examples close the gap between an abstract idea and a real-world application. They show what happens when you give an AI a goal and the freedom to figure out how to get there.

Pioneering Open-Source Agents

When AI agents first started grabbing headlines, a couple of open-source projects were leading the way. They weren't perfect, but they gave us a real taste of what an autonomous AI future could look like.

Two of the most famous examples are:

- Auto-GPT: This was one of the first to go viral. It showed an agent that could take a high-level goal, break it down into steps, and use tools like web search and file writing to get the job done. The way it could prompt itself and chain thoughts together was a huge leap forward.

- BabyAGI: This one focused on simplicity. It used a clean, straightforward loop: create tasks based on a goal, prioritize them, and then knock them out one by one. It was a simple but surprisingly effective model for autonomous work.

These early agents proved that an LLM, combined with a basic execution loop and a few tools, could achieve some pretty impressive things without a human guiding every single step.
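The create, prioritize, execute loop described for BabyAGI can be illustrated with a task queue. This is a simplified sketch of the idea as described here, not BabyAGI's actual code; the task list and priorities are hard-coded where the real project would ask an LLM.

```python
from collections import deque


def create_tasks(goal: str) -> list[dict]:
    # Stand-in for an LLM call that proposes tasks; priorities are invented.
    return [
        {"name": "write summary", "priority": 3},
        {"name": "collect sources", "priority": 1},
        {"name": "extract key facts", "priority": 2},
    ]


def prioritize(tasks: list[dict]) -> deque:
    # Lower number means the task runs earlier.
    return deque(sorted(tasks, key=lambda task: task["priority"]))


def execute(task: dict) -> str:
    return f"done: {task['name']}"  # a real agent would call tools here


def run(goal: str) -> list[str]:
    queue = prioritize(create_tasks(goal))
    completed = []
    while queue:
        completed.append(execute(queue.popleft()))
    return completed


log = run("Summarize recent research on battery recycling")
```

The tasks come back out of order from `create_tasks`, and the queue makes sure sources are collected before anything is summarized.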

Frameworks for Building Your Own Agents

While projects like Auto-GPT are cool standalone apps, developers often need more control to build agents for specific tasks. That's where agent frameworks come in. They provide the LEGO bricks, such as tool integrations and memory modules, needed to build custom solutions.

One of the biggest names in this space is LangChain. It’s less of an agent itself and more of a powerful toolkit for connecting LLMs to data sources, APIs, and other tools. It does the heavy lifting so developers can focus on the agent's logic.

Frameworks like this are what turn the idea of AI agents into practical business tools. You can see how this is playing out across different sectors in our guide to generative AI business applications.

To give you a clearer picture of the landscape, it helps to see how the major players stack up.

Popular AI Agent Frameworks and Tools Compared

Choosing the right tool depends entirely on what you're trying to build. Are you experimenting with a fully autonomous concept, or are you a developer who needs to integrate agent-like features into an existing enterprise application? This table breaks down some of the most popular options.

| Tool/Framework | Primary Function | Ideal Use Case | Technical Skill Required |
|---|---|---|---|
| Auto-GPT | Autonomous Agent Application | Experimenting with fully autonomous task completion for general-purpose goals. | Low (User) to Medium (Setup) |
| LangChain | Developer Framework | Building custom AI agents and complex LLM-powered applications from the ground up. | High (Python programming) |
| CrewAI | Multi-Agent System Framework | Creating collaborative teams of specialized agents for complex, multifaceted projects. | High (Python programming) |
| Microsoft Semantic Kernel | Enterprise SDK | Integrating agents into existing enterprise applications and workflows with a focus on C# and Python. | High (Programming) |

Each of these has its own strengths. CrewAI, for example, is fantastic when you need multiple agents to "talk" to each other, while Microsoft's Semantic Kernel is built for serious, enterprise-grade development.

A Practical Scenario: Market Research Automation

Okay, let's make this real. Imagine you need to do market research for a new product launch—a task that usually takes a person hours of tedious clicking and copying.

An AI agent could handle the whole thing. Let's give it a goal: "Analyze the market for eco-friendly water bottles and compile a summary report."

Here's how it might go about it:

- Planning: First, the agent thinks through the problem. Its internal monologue might sound something like: "Okay, I need to find the top competitors. Then, I'll check out their products and prices. After that, I'll dig into customer reviews to see what people actually think. Finally, I'll pull it all together in a report."

- Execution with Tools: Now, it starts working through that plan, one step at a time.

  - Action 1 (Web Search): It fires up its browser tool and searches for "top eco-friendly water bottle brands 2024." It scans the results and makes a list of the key players.

  - Action 2 (Data Extraction): The agent visits each competitor's website, grabbing details like product names, materials, prices, and features. It saves this structured data to its short-term memory.

  - Action 3 (Sentiment Analysis): Next, it heads to sites like Amazon and Reddit to find reviews for these products. Using its reasoning skills, it sorts the feedback into themes: positive things like "durable" or "keeps water cold," and negative ones like "leaks" or "hard to clean."

- Synthesis and Reporting: With all the info gathered, the agent's last job is to create the report. It organizes the competitor data into a neat table, summarizes what customers are saying, and writes a conclusion pointing out potential gaps in the market.

The final result? A comprehensive report, delivered automatically, all from a single starting prompt. That's the power of an AI agent in action.
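To give a feel for the review-sorting step, here is a small sketch that groups feedback into themes. Note the swap: the agent described above would use the LLM's reasoning to do this, whereas this example uses plain keyword matching so it can run on its own. The theme keywords and sample reviews are invented.

```python
from collections import Counter

# Hypothetical theme keywords; a real agent would rely on the LLM's judgement.
THEMES = {
    "durability": ("durable", "sturdy", "broke"),
    "insulation": ("cold", "hot", "insulated"),
    "leaks": ("leak", "leaks", "spill"),
    "cleaning": ("clean", "wash"),
}


def tag_review(review: str) -> set[str]:
    # Split into lowercase words with basic punctuation removed.
    words = review.lower().replace(",", " ").replace(".", " ").split()
    return {theme for theme, keys in THEMES.items() if any(k in words for k in keys)}


def summarize(reviews: list[str]) -> Counter:
    counts = Counter()
    for review in reviews:
        counts.update(tag_review(review))
    return counts


reviews = [  # invented sample reviews
    "Very durable and keeps water cold all day.",
    "The lid leaks in my bag.",
    "Hard to clean, but sturdy.",
]
theme_counts = summarize(reviews)
```

The resulting counts are the kind of structured summary the agent could drop straight into the customer-feedback section of its report.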

Challenges and Ethical Considerations

As exciting as AI agents are, we need to talk about the flip side. It’s easy to get swept up in the potential, but we have to go in with our eyes wide open about their limitations and the very real ethical questions they bring up. To really get a handle on how these agents work, you have to understand where they stumble.

One of the biggest headaches is something called AI hallucination. This is when an agent just makes things up but presents them as stone-cold facts. Since agents often work in sequential steps, one little fabrication at the beginning can send the whole process off the rails, leading to a final result that’s completely wrong.

Then there’s the frustrating problem of infinite loops. An agent can get stuck trying the same failed action over and over again, burning through API calls and racking up costs without getting anywhere. If you don't have the right guardrails in place, a "helpful" autonomous agent can quickly turn into a money pit.
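One simple guardrail against runaway loops is a counter that halts the run when it exceeds a step budget or repeats the same action too many times. The `LoopGuard` class below is a hypothetical sketch of that idea, and the limits shown are arbitrary.

```python
class LoopGuardError(RuntimeError):
    pass


class LoopGuard:
    """Stops a run that exceeds a step budget or repeats one action too often."""

    def __init__(self, max_steps: int = 20, max_repeats: int = 3):
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self.last_action = None
        self.repeat_count = 0

    def check(self, action: str) -> None:
        self.steps += 1
        if self.steps > self.max_steps:
            raise LoopGuardError(f"step budget of {self.max_steps} exceeded")
        if action == self.last_action:
            self.repeat_count += 1
        else:
            self.last_action, self.repeat_count = action, 1
        if self.repeat_count > self.max_repeats:
            raise LoopGuardError(f"action {action!r} repeated {self.repeat_count} times")


guard = LoopGuard(max_steps=10, max_repeats=2)
outcome = "finished"
try:
    for action in ["search:flights", "open:page", "open:page", "open:page"]:
        guard.check(action)
except LoopGuardError as error:
    outcome = f"halted: {error}"
```

Calling `guard.check` before every tool call means a stuck agent fails fast instead of quietly burning through API credits.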

Security and Data Privacy Concerns

Beyond the technical hiccups, the security and privacy issues are even more serious. Think about it: when you give an agent the keys to your digital kingdom—your inbox, your company's private files, your financial accounts—you're creating a massive potential vulnerability.

Data security is a huge challenge, which is why a reported 60% of firms restrict agent access to sensitive info. This is only going to become more important. By 2026, agents will be more like digital coworkers, woven into everything from wealth management to R&D. If you want to dive deeper into these trends, check out the full AI agents market report from BCC Research.

A Critical Consideration: An AI agent is only as secure as the permissions it's granted and the systems it can access. A compromised agent could theoretically read sensitive emails, misuse financial information, or leak proprietary company data.

This is why rock-solid security isn't just a nice-to-have; it's a necessity. A core best practice is to grant an agent the absolute minimum level of permissions it needs to do its job—and nothing more. A human in the loop is also crucial to make sure the agent is staying on track and not creating unintended problems. For more on this, you can explore our guide on the reliability of AI detectors, which gets into related trust issues.
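Minimum permissions and human sign-off can both be expressed as a deny-by-default check that runs before any action. The sketch below is illustrative; `AgentPermissions`, `authorize`, and the action names are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPermissions:
    allowed_actions: frozenset               # e.g. {"calendar.read"}
    needs_approval: frozenset = frozenset()  # actions a human must confirm


def authorize(perms: AgentPermissions, action: str, human_approved: bool = False) -> bool:
    """Deny by default; allow only listed actions, with approval where required."""
    if action not in perms.allowed_actions:
        return False
    if action in perms.needs_approval and not human_approved:
        return False
    return True


scheduler = AgentPermissions(
    allowed_actions=frozenset({"calendar.read", "calendar.write", "email.send"}),
    needs_approval=frozenset({"email.send"}),
)

can_read = authorize(scheduler, "calendar.read")
can_send_unapproved = authorize(scheduler, "email.send")
can_send_approved = authorize(scheduler, "email.send", human_approved=True)
can_delete = authorize(scheduler, "files.delete", human_approved=True)
```

Here the scheduling agent can read the calendar freely, can send email only after a person approves, and can never delete files, even with approval.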

The Need for Responsible Governance

Because AI agents can operate on their own, governance and regulation carry a lot of weight. Every organization needs to have clear, documented policies for how agents are developed, deployed, and supervised.

These policies should cover a few key areas:

- Data Handling: Firm rules on what data an agent is allowed to touch, process, and keep.

- Accountability: Knowing exactly who is responsible when an agent messes up.

- Transparency: Having a way to look under the hood and understand why an agent made a certain decision.
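In practice, accountability and transparency often start with a decision log: a record of what the agent did, why, what data it touched, and who owns it. The `DecisionLog` class below is a hypothetical sketch of such a record, not a compliance tool.

```python
import json
from datetime import datetime, timezone


class DecisionLog:
    """Append-only record of what an agent did, why, and who owns it."""

    def __init__(self, owner: str):
        self.owner = owner  # accountable person or team
        self.entries: list[dict] = []

    def record(self, action: str, reason: str, data_touched: list[str]) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "owner": self.owner,
            "action": action,
            "reason": reason,
            "data_touched": data_touched,
        }
        self.entries.append(entry)
        return entry

    def export(self) -> str:
        # One JSON object per line, convenient for later review.
        return "\n".join(json.dumps(entry) for entry in self.entries)


decision_log = DecisionLog(owner="ops-team@example.com")
decision_log.record("crm.update", "lead replied to outreach email", ["crm.contacts"])
exported = decision_log.export()
```

Each entry answers the three policy questions at once: which data was touched, who is responsible, and why the agent acted.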

As agents become more common, staying on top of the regulatory environment is non-negotiable. It's worth looking into what it takes to achieve AI Act readiness to stay compliant and operate ethically. At the end of the day, building trustworthy AI agents means baking in safety, security, and ethical thinking from the very beginning.

The Future of AI Agents and Getting Started

It’s easy to think of AI agents as just another tool, but what we're really watching is the start of a massive shift in how work gets done. The future isn't about one-off agents doing single tasks; it's about interconnected teams of agents running entire business processes on their own.

This isn't just a prediction—it's already happening. We're moving toward what some are calling an "agent-as-a-service" economy.

An Economy Built on Agents

Think about this: Goldman Sachs has painted a picture where hybrid teams of humans and AI agents bill for work based on AI tokens used, not hours clocked. It's a fundamental change in the business model itself.

The adoption rate is also telling. Nearly 75% of companies are looking to bring agentic AI into their operations within the next two years. If you want to dig into the numbers, these agentic AI statistics break down the market's trajectory pretty clearly.

Essentially, we're on the cusp of automating a huge chunk of routine cognitive work. This frees up human brainpower for what we do best: high-level strategy, creative problem-solving, and managing the big picture.

Your Next Steps into Agentic AI

Ready to jump in? The good news is you don't need a Ph.D. in computer science to start getting your hands dirty. Here are a few practical ways to begin exploring the world of AI agents.

- Experiment with Open-Source Projects: A great starting point is Auto-GPT. It gives you a real feel for how an autonomous agent works. Just give it a goal—even a simple one—and watch it try to figure out the steps, use tools, and get the job done.

- Explore Developer Frameworks: If you're comfortable with a bit of code, the documentation for LangChain is invaluable. It’s like a LEGO set for building agents, letting you piece together the core components and understand how they interact.

- Follow Industry Leaders: Keep an eye on people like Andrej Karpathy and the big AI research labs. They often share insights and experiments that give you a peek into what's coming next, long before it becomes a mainstream product.

The key is to just start. By playing around with these tools, you'll quickly build an intuitive understanding of one of the most significant technological changes happening right now.

Frequently Asked Questions (FAQ)

1. How is an AI agent different from a chatbot like ChatGPT?

A chatbot is designed for conversation; it answers prompts. An AI agent is designed for action; it takes a goal and independently uses tools (like web browsers or APIs) to complete multi-step tasks. Think of it as the difference between asking for information and delegating a project.

2. What are the core components of an AI agent?

Most AI agents can be described in terms of four pillars: Perception (gathering data from its environment), Planning (using a large language model to reason and create a strategy), Action (using tools to execute the plan), and Memory (storing information to learn and maintain context).

3. What is the ReAct framework?

ReAct stands for Reason + Act. It's a fundamental loop that powers many agents. The agent Reasons to decide the next logical step, Acts by using a tool, and then Observes the result, which informs the next cycle. The loop repeats until the goal is complete.

4. Can AI agents learn from their mistakes?

Yes, through their memory module. Short-term memory helps them track progress on a current task, while long-term memory allows them to store insights from past tasks. This helps them avoid repeating errors and become more efficient over time.

5. What is the difference between single-agent and multi-agent systems?

A single-agent system is one AI working alone on a task. A multi-agent system is a team of specialized AIs that collaborate. For example, a "research agent" could gather data, a "financial agent" could analyze it, and a "writing agent" could compile the final report. Multi-agent systems can handle much more complex problems.

6. Are AI agents safe to use with personal or company data?

This is a critical concern. Safety depends on the agent's security and the permissions you grant. Giving an agent access to sensitive data creates risks. Best practices include using agents from trusted sources, granting minimal necessary permissions (principle of least privilege), and keeping a human in the loop for oversight.

7. What kinds of tools do AI agents typically use?

Common tools include web browsers (for research), code interpreters (for data analysis and calculations), and APIs (to connect with other software like email, calendars, and databases). The agent's ability to intelligently choose and use these tools is what makes it powerful.

8. What happens when an AI agent gets stuck or encounters an error?

Simpler agents might get stuck in a loop, but more sophisticated agents can use their reasoning ability to self-correct. They can analyze the error, try alternative steps, or even attempt to debug their own approach to get back on track.

9. How much does it cost to run an AI agent?

The cost varies widely based on the complexity of the task, the underlying LLM used (e.g., GPT-4 vs. a cheaper model), and the number of actions (API calls) required. A simple task might cost pennies, while a complex, long-running task could cost hundreds of dollars. Monitoring API usage is essential.
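A back-of-the-envelope estimate makes the range easier to see. The sketch below multiplies the number of LLM calls by tokens per call and a per-token price. The prices used are placeholders, not real vendor rates, and `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(calls: int, input_tokens: int, output_tokens: int,
                  input_price_per_million: float, output_price_per_million: float) -> float:
    """Estimated spend in dollars for `calls` LLM calls of the given size."""
    per_call = (input_tokens * input_price_per_million
                + output_tokens * output_price_per_million) / 1_000_000
    return calls * per_call


# Placeholder prices in dollars per million tokens, not real vendor rates.
simple_task = estimate_cost(calls=5, input_tokens=2_000, output_tokens=500,
                            input_price_per_million=1.0, output_price_per_million=4.0)
long_task = estimate_cost(calls=2_000, input_tokens=20_000, output_tokens=2_000,
                          input_price_per_million=1.0, output_price_per_million=4.0)
```

With these made-up numbers the simple task comes to about two cents and the long-running one to about $56, which shows how quickly the number of calls and the size of the context drive the total.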

10. Is an AI agent the same as AGI (Artificial General Intelligence)?

No. AI agents are a form of narrow AI, meaning they are designed to perform specific tasks within a digital environment, even if they do so autonomously. AGI is a hypothetical future AI with human-like intelligence capable of understanding and learning any intellectual task a human can. We are not there yet.

At Everyday Next, we're committed to breaking down complex tech topics to help you stay informed. For more guides on AI, finance, and personal growth, explore our latest articles at https://everydaynext.com.