10 Practical Natural Language Processing Examples You’ll See in 2026

Natural Language Processing (NLP) is one of the most transformative technologies of our time, quietly powering the apps and services we use daily. It's the magic behind how your phone understands voice commands, how news feeds categorize articles, and how chatbots provide instant support. The fundamental process of turning human speech into machine-readable data often begins with a high-quality audio-to-text converter, a core capability that enables more complex analysis.

But how does this technology actually work in the real world, and what strategic value does it create? This article moves beyond technical jargon to showcase 10 practical natural language processing examples across key industries like finance, tech, and healthcare. We will break down each application, revealing the specific business value, the typical methods used, and actionable insights you can apply.

Whether you're an investor looking for an edge, a professional aiming to boost productivity, or simply curious about the AI shaping our world, this list provides a clear, strategic look at how machines make sense of language. Forget the abstract theory; we are focusing on concrete examples that demonstrate the real-world impact and replicable strategies behind NLP.

1. Sentiment Analysis

Sentiment analysis is one of the most practical and widely used natural language processing examples today. At its core, this technology automatically identifies and classifies the emotional tone or opinion within a piece of text, determining whether the writer's attitude is positive, negative, or neutral. It's like having a digital ear that listens to the collective voice of the internet, from customer reviews and social media posts to complex financial news articles, to gauge public perception and market mood.

This process goes beyond simple keyword matching. Modern sentiment analysis models, often built with recurrent neural networks (RNNs) or transformer architectures like BERT, take context and industry-specific jargon into account, although sarcasm and irony remain hard cases even for them. For investors, this can mean a meaningful edge. For instance, a financial firm might analyze thousands of tweets and news headlines about Tesla to create a real-time sentiment score that serves as one input for anticipating stock volatility before it’s reflected in the price. This real-world application of AI is a powerful way to integrate technology into your decision-making processes, a concept you can explore further as you learn how to use AI in daily life.

Strategic Value and Implementation

For businesses and investors, the key is to apply sentiment analysis strategically.

- Financial Markets: Instead of just reacting to price movements, you can proactively track the sentiment shifts that often precede them. Monitoring earnings call transcripts or regulatory filings can reveal subtle changes in executive tone that signal future performance.

- Brand Management: Companies can monitor social media channels like Twitter and Reddit to instantly gauge public reaction to a product launch or marketing campaign, allowing for rapid adjustments.

- Real-Life Example: A hedge fund used sentiment analysis on social media and news reports during the GameStop saga in early 2021. By tracking the rapidly changing sentiment among retail investors on Reddit's r/wallstreetbets, they could anticipate massive short squeezes and adjust their positions, either mitigating losses or capitalizing on the volatility.

When implementing, remember to use domain-specific models. A generic sentiment tool might misinterpret financial terms like "bearish" or "volatile." For reliable insights, always cross-reference sentiment data from multiple sources to avoid being misled by isolated or manipulated opinions.
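The point about domain-specific models can be sketched with a toy lexicon-based scorer. This is a minimal illustration, not how production sentiment models work: the two word lists, the `sentiment_score` and `label` helpers, and the threshold are all assumptions made up for this example, and a real system would learn these signals from labeled data.

```python
import re

# Hypothetical mini-lexicons for illustration only; real systems learn these
# signals from labeled data or use a curated financial lexicon.
GENERIC_LEXICON = {"great": 1, "strong": 1, "gain": 1, "weak": -1, "loss": -1, "poor": -1}
FINANCE_LEXICON = {"bearish": -1, "bullish": 1, "downgrade": -1, "upgrade": 1, "beat": 1, "miss": -1}


def sentiment_score(text, lexicon):
    """Return the mean polarity of the lexicon words found in text (0.0 if none)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    hits = [lexicon[t] for t in tokens if t in lexicon]
    return sum(hits) / len(hits) if hits else 0.0


def label(score, threshold=0.25):
    """Map a numeric score to a coarse sentiment label."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


headline = "Analysts turn bearish after earnings miss"
generic = sentiment_score(headline, GENERIC_LEXICON)
domain = sentiment_score(headline, {**GENERIC_LEXICON, **FINANCE_LEXICON})
print(label(generic), label(domain))  # neutral negative
```

The generic lexicon has no entry for "bearish" or "miss," so it reads the headline as neutral, while the finance-aware lexicon flags it as negative.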

2. Named Entity Recognition (NER)

Named Entity Recognition (NER) is a fundamental task in NLP that automatically identifies and categorizes key pieces of information in text. Think of it as a smart highlighter that scans documents to find and label important "entities" like people, organizations, locations, dates, and monetary values. For investors and researchers, NER is an indispensable tool that transforms messy, unstructured financial reports and news articles into structured, actionable data, making it easier to spot trends and extract crucial facts.

This technology powers many applications, from automatically populating contact lists to sophisticated intelligence gathering. In finance, an NER model can process a press release and instantly extract the CEO's name, the quarterly revenue figure, and the company headquarters' location. Advanced models built on transformer architectures can distinguish context with high accuracy. To further explore how machines automatically identify and classify key information in text, consult this guide on Named Entity Recognition (NLP). These capabilities make NER a cornerstone among practical natural language processing examples.

Strategic Value and Implementation

For business and investment analysis, NER provides a direct path to data-driven insights from text.

- Financial Analysis: Quickly extract all monetary values and company names from thousands of documents to build a financial dataset. This is far more efficient than manual review and less prone to human error.

- Competitive Intelligence: Monitor news and corporate filings to automatically identify key executives, partnerships, and product names mentioned alongside your competitors, helping you track their strategic moves in real time.

- Real-Life Example: Healthcare providers use NER to scan unstructured clinical notes and extract key patient information like diagnoses, medications, and symptoms. This structures the data for electronic health records (EHRs), improving patient care and enabling large-scale medical research.

When implementing NER, start with the pre-trained pipelines that ship with a library like spaCy for general use. For specialized fields like finance, fine-tune your model on domain-specific data to correctly identify terms like "Series A funding" or complex ticker symbols. Always validate the extracted entities, because the same string can be a different entity type depending on context (for example, "Apple" the company versus the fruit).
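To make the idea of turning text into labeled entities concrete, here is a rule-based stand-in that needs no model download. It is a sketch under stated assumptions: the regex patterns, the label names, and the company in the sample sentence are invented for illustration, and a trained NER model would replace these hand-written rules in practice.

```python
import re

# Hypothetical patterns for illustration; a trained NER model would replace these.
PATTERNS = {
    "MONEY": re.compile(r"\$\d+(?:\.\d+)?(?:\s(?:million|billion))?"),
    "TICKER": re.compile(r"\((?:NASDAQ|NYSE):\s?([A-Z]{1,5})\)"),
    "FUNDING_ROUND": re.compile(r"Series [A-E] funding"),
}


def extract_entities(text):
    """Return a list of (value, label) pairs found by the patterns above."""
    entities = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            # Use the captured group when the pattern defines one (the ticker symbol).
            value = match.group(1) if pattern.groups else match.group(0)
            entities.append((value, label))
    return entities


# "Acme Robotics" is a made-up company used only for this example.
sample = "Acme Robotics (NASDAQ: ACME) raised $40 million in Series B funding."
print(extract_entities(sample))
```

Rules like these are brittle, which is exactly why the validation step matters: anything the patterns miss or mislabel has to be caught downstream.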

3. Machine Translation and Language Understanding

Machine translation is a fundamental natural language processing example that automatically converts text from a source language to a target language while striving to preserve its original meaning and context. It breaks down language barriers, making information universally accessible. Modern systems like Google Translate and DeepL use sophisticated neural networks to grasp grammar, idioms, and cultural nuances, moving far beyond literal word-for-word conversions. This technology empowers global communication and research.

For an international audience, machine translation is a gateway to a world of information. An investor can instantly access financial news from Asian markets, a tech enthusiast can read European fintech articles, and a real estate professional can understand property listings from another country. This real-time access to global insights provides a significant competitive advantage, enabling users to spot emerging trends and opportunities as they happen, regardless of linguistic origin.

Strategic Value and Implementation

Strategically applying machine translation unlocks access to previously inaccessible data streams, offering a broader perspective on global markets and innovations.

- Global Market Research: Use translation tools to monitor international news outlets, regulatory websites, and social media in emerging markets. This allows you to identify investment opportunities or competitive threats long before they become mainstream.

- Cross-Border Collaboration: Instantly translate communications with international partners, clients, or teams. This accelerates project timelines and reduces misunderstandings common in multilingual business environments.

- Real-Life Example: E-commerce giant eBay uses machine translation to allow a buyer in the United States to search for products, read descriptions, and communicate with a seller in Germany, all in their native languages. This seamless cross-language experience dramatically expanded their global marketplace.

For high-stakes applications like financial analysis, use specialized translation models trained on industry-specific terminology. Always cross-reference critical data points with the original text or a human reviewer to ensure accuracy, as nuances can sometimes be lost. This approach combines the speed of AI with the precision of human oversight.

4. Text Classification and Topic Modeling

Text classification and topic modeling are foundational natural language processing examples that bring order to unstructured data. Text classification automatically assigns predefined categories to a document, like labeling an email as "urgent" or a news article as "finance." Topic modeling goes a step further by algorithmically discovering the hidden themes or topics within a large collection of texts without any predefined labels. This helps you understand and organize vast amounts of information efficiently.

For an investor or tech enthusiast, this means filtering a sea of news to find exactly what matters. Imagine a system that automatically sorts articles by sector (tech, healthcare), risk level, or relevance to your portfolio. This technology moves beyond manual sorting to provide a streamlined, intelligent content consumption experience, a core concept in modern data science. You can dive deeper into the foundational principles by exploring machine learning for beginners.

Strategic Value and Implementation

The strategic advantage lies in transforming information overload into structured intelligence.

- Content Curation: News aggregators and investment platforms use classification to deliver personalized content feeds, ensuring users see the most relevant articles first. This increases user engagement and retention.

- Operational Efficiency: Businesses can automatically route customer support tickets to the correct department or classify legal documents by type, saving countless hours of manual labor and reducing human error.

- Real-Life Example: Gmail's spam filter is a classic example of text classification. It analyzes incoming emails for features associated with spam (e.g., certain phrases, suspicious links) and automatically classifies them, moving them out of the primary inbox to protect users.

For effective implementation, start with pre-trained models like BERT and fine-tune them on your specific domain's data for higher accuracy. Using hierarchical classification can create more granular and useful categories, such as classifying an article first as "Technology" and then as "AI" or "Cybersecurity."
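Before fine-tuning a large model, it can help to see what a classifier does at its simplest. The sketch below swaps in a classic multinomial Naive Bayes baseline (not BERT) written with only the standard library. The four training headlines and the `NaiveBayes` class are invented for illustration, and a real project would use far more labeled data.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayes:
    """Tiny multinomial Naive Bayes text classifier with add-one smoothing."""

    def fit(self, docs, labels):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.label_counts = Counter(labels)      # label -> number of documents
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, doc):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.label_counts.items():
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            score = math.log(n_docs / total_docs)  # log prior
            for word in tokenize(doc):
                # Add-one smoothing keeps unseen words from zeroing the score.
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training set, far too small for real use.
train_docs = [
    "Central bank raises interest rates again",
    "Quarterly earnings beat analyst estimates",
    "New smartphone chip doubles battery life",
    "Startup releases open source AI model",
]
train_labels = ["finance", "finance", "tech", "tech"]

model = NaiveBayes().fit(train_docs, train_labels)
print(model.predict("Bank earnings rise as rates climb"))
print(model.predict("Open source chip for AI"))
```

A hierarchical setup could reuse the same idea: one classifier picks the top-level category, and a second classifier trained only on that category's documents picks the subcategory.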

5. Question Answering and Chatbots

Question Answering (QA) systems and chatbots represent one of the most interactive and impactful natural language processing examples. This technology empowers computers to understand user questions posed in everyday language and provide direct, relevant answers by retrieving information from a knowledge base or generating a coherent response. It’s the engine behind virtual assistants like ChatGPT, customer service bots that resolve your issues instantly, and AI-powered tutors that explain complex financial concepts on demand.

Modern systems use sophisticated architectures like transformers to grasp context, nuance, and user intent. For a fintech company, this could mean deploying a chatbot that guides a user through investment options, explaining terms like "ETFs" and "dollar-cost averaging" conversationally. For professionals, it means getting instant, summarized answers from dense technical documents instead of spending hours searching. The rise of these systems showcases how generative AI business applications are transforming user interaction and information access.

Strategic Value and Implementation

Strategically deploying QA systems and chatbots is about delivering instant, scalable, and personalized support.

- Financial Education: An AI tutor can provide 24/7 assistance to new investors, answering questions about market trends or portfolio diversification, making financial literacy more accessible and less intimidating.

- Customer Support: Fintech apps can use chatbots to handle common queries like "how do I reset my password?" or "what are the trading fees?", freeing up human agents for more complex, high-stakes issues.

- Real-Life Example: The chatbot on the UPS website allows customers to ask "Where is my package?" in natural language. The system understands the intent, extracts the tracking number from the conversation, queries its database, and provides a real-time status update conversationally.

For effective implementation, start with a retrieval-based system trained on your own verified documents for factual accuracy, especially in finance. For a more natural user experience, blend this with generative capabilities. Always include source citations to build trust and provide a fallback to a human expert for sensitive or intricate questions.
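The retrieval-first pattern with citations and a human fallback can be sketched in a few lines. This is a simplified illustration that matches on word overlap rather than embeddings. The knowledge base entries, source IDs, stopword list, and `answer` function are all hypothetical.

```python
import re

# Hypothetical knowledge base: (source id, verified answer text).
KNOWLEDGE_BASE = [
    ("faq/password", "To reset your password, open Settings and choose Reset Password."),
    ("faq/fees", "Trading fees are charged per executed order and listed on the pricing page."),
    ("glossary/etf", "An ETF is a fund that trades on an exchange like a stock."),
]

STOPWORDS = {"a", "an", "the", "is", "are", "do", "i", "how", "what", "my",
             "to", "on", "and", "that", "your", "like"}


def content_words(text):
    """Lowercase the text and keep only non-stopword tokens."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def answer(question, min_overlap=1):
    """Return the best-matching entry with its source, or hand off to a human."""
    query = content_words(question)
    best_source, best_text, best_overlap = None, None, 0
    for source, text in KNOWLEDGE_BASE:
        overlap = len(query & content_words(text))
        if overlap > best_overlap:
            best_source, best_text, best_overlap = source, text, overlap
    if best_overlap < min_overlap:
        # Nothing in the verified documents matches: escalate instead of guessing.
        return {"answer": None, "source": None, "handoff": True}
    return {"answer": best_text, "source": best_source, "handoff": False}


print(answer("How do I reset my password?"))
print(answer("Should I sell my house?"))
```

Returning the source ID alongside every answer is what makes citations possible, and the explicit `handoff` flag gives the surrounding application a clear signal to route the conversation to a person.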

6. Summarization and Content Condensation

Text summarization is an increasingly vital natural language processing example that automatically condenses lengthy documents into shorter, coherent summaries. This technology tackles information overload by distilling core ideas from complex texts, allowing you to grasp key insights without reading every word. It operates through two main methods: extractive summarization, which pulls key sentences directly from the source, and abstractive summarization, which generates entirely new text to capture the original's essence. For busy investors and professionals, this means quickly consuming financial reports or tech whitepapers.

Modern summarization tools, powered by models like Google's PEGASUS or OpenAI's GPT series, can process vast amounts of text in seconds. An investment research firm might use summarization to condense hours of earnings call transcripts into a few bullet points, highlighting CEO commentary on future guidance. This immediate access to crucial data can be a significant competitive advantage. Integrating these capabilities into your workflow is a hallmark of using smart AI tools for productivity, transforming how you research and analyze information.

Strategic Value and Implementation

For accelerated research and decision-making, applying summarization strategically is essential.

- Financial Analysis: Quickly generate briefs of quarterly earnings reports or lengthy analyst documents to identify key financial metrics and management outlooks, saving hours of reading time.

- Market Research: Condense long-form tech articles, industry whitepapers, and competitor press releases to stay informed about market trends without getting bogged down in details.

- Real-Life Example: The news app Artifact, which wound down in 2024, used summarization to give readers a bulleted list of key takeaways at the top of an article, and similar features now appear in other news products. This allows readers to quickly understand the main points before deciding whether to read the full text, saving valuable time.

When implementing, choose your method wisely. Use extractive summarization for situations requiring factual precision, like legal or regulatory documents. For a more narrative overview, abstractive summarization works well, but always cross-reference its output with the source material to verify accuracy. Adjust the summary length to fit your needs, from a single headline to a multi-paragraph brief.
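Extractive summarization is simple enough to sketch without any model. The version below scores each sentence by the average frequency of its words and keeps the top-scoring sentences in their original order. It is one basic heuristic among many, and the stopword list, the `summarize` function, and the sample text are assumptions made for illustration.

```python
import re
from collections import Counter

# Small hypothetical stopword list; real systems use a much longer one.
STOPWORDS = {"the", "a", "an", "this", "was", "from", "of", "and", "to", "in", "is"}


def content_tokens(text):
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def summarize(text, max_sentences=2):
    """Keep the highest-scoring sentences, preserving their original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_tokens(text))

    def score(sentence):
        tokens = content_tokens(sentence)
        # Average word frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)


report = (
    "Revenue grew this quarter. "
    "Revenue growth came from cloud revenue. "
    "The weather was pleasant."
)
print(summarize(report, max_sentences=1))  # Revenue growth came from cloud revenue.
```

Because every output sentence is copied verbatim from the source, this approach cannot introduce facts that were not in the original, which is the property that makes extractive methods attractive for legal or regulatory text.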

7. Keyword Extraction and Information Retrieval

Keyword extraction is one of the most fundamental natural language processing examples, serving as the backbone for search and content discovery. At its core, this technology automatically identifies the most important and representative terms or phrases within a text. This allows systems to quickly grasp the main topics of a document without needing a human to read and tag it, enabling highly efficient information retrieval.

This process involves more than just finding the most frequent words. Statistical methods such as TF-IDF weigh how often a term appears in a document against how common it is across a whole collection, while newer unsupervised algorithms like YAKE (Yet Another Keyword Extractor) also consider features such as a term's position and surrounding context. For a content platform, this means automatically tagging an article about "AI-powered trading bots" with keywords like "fintech," "algorithmic trading," and "machine learning," which helps users find relevant information. This capability is central to tools that sift through vast amounts of data, a concept explored in search-focused AI like Perplexity, which you can learn about in this comparison to ChatGPT.

Strategic Value and Implementation

For businesses and content creators, the strategic application of keyword extraction is key to visibility and user engagement.

- Content Platforms: Automatically generate tags for articles, blog posts, and reports. This improves internal search functionality and powers recommendation engines that suggest related content to keep readers engaged.

- Market Research: Analyze customer feedback, reviews, or social media posts to identify recurring themes and emerging trends. A fintech company could use this to discover that customers are frequently discussing "digital wallets" or "contactless payments."

- Real-Life Example: SEO tools like Ahrefs and SEMrush use keyword extraction to analyze top-ranking web pages. They identify the key terms and phrases that Google's algorithm deems most relevant for a given topic, helping content creators optimize their articles for better search visibility.

When implementing, it's crucial to customize the approach. Use domain-specific terminology lists to ensure financial or tech-related keywords are accurately identified. For enhanced precision, combine keyword extraction with Named Entity Recognition (NER) to distinguish between generic terms and specific entities like companies, products, or people.
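TF-IDF, mentioned earlier in this section, is compact enough to write out directly. The sketch below is a bare-bones version using term frequency times the log of inverse document frequency. The three sample documents and the `tfidf_keywords` function are made up for illustration, and library implementations typically add smoothing and normalization.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def tfidf_keywords(docs, doc_index, top_k=3):
    """Rank the words of one document by TF-IDF against the whole collection."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    # Document frequency: in how many documents does each word appear?
    doc_freq = Counter(w for tokens in tokenized for w in set(tokens))
    tf = Counter(tokenized[doc_index])
    total = len(tokenized[doc_index])
    scores = {
        w: (count / total) * math.log(n_docs / doc_freq[w])
        for w, count in tf.items()
    }
    # Sort by score (descending), breaking ties alphabetically for stable output.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_k]


# Hypothetical mini-corpus.
docs = [
    "Trading bots use machine learning to automate trading",
    "Banks use mobile apps to reach customers",
    "Customers use digital wallets to pay",
]
print(tfidf_keywords(docs, 0))
```

Words such as "use" and "to" appear in every document, so their inverse document frequency is zero and they drop to the bottom of the ranking without needing a stopword list.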

8. Parsing and Syntactic Analysis

Parsing, or syntactic analysis, is a foundational natural language processing example that deconstructs the grammatical structure of a sentence. It goes beyond identifying individual words to understand the relationships between them, creating a hierarchical tree-like structure that maps out subjects, verbs, objects, and modifiers. This process is like creating a grammatical blueprint of a sentence, allowing a machine to comprehend complex ideas, questions, and statements with much greater accuracy.

While more technical than some applications, parsing is the engine behind many sophisticated AI systems. For instance, an advanced question-answering system relies on parsing to understand that "Who acquired the tech startup?" is asking for the agent of an action, not the object. Similarly, financial tools use it to extract precise relationships from dense legal contracts or earnings reports, identifying which company acquired another and for how much. This deep structural understanding is crucial for building systems that can genuinely comprehend, not just recognize, language.

Strategic Value and Implementation

For developers and businesses, leveraging syntactic analysis unlocks a more nuanced level of data extraction and system intelligence.

- Information Extraction: Instead of just finding keywords, you can extract specific relationships from documents. For example, parsing a news release to automatically identify which executive made a specific forward-looking statement and what the conditions were.

- Advanced Chatbots: Enhance chatbot capabilities by enabling them to understand complex, multi-part user queries. A system can parse "Book me a flight to New York and find a hotel near Central Park" into two distinct commands with their respective parameters.

- Real-Life Example: Grammar-checking tools like Grammarly use parsing to analyze the grammatical structure of your sentences. It identifies subjects, verbs, and clauses to detect errors like subject-verb disagreement, incorrect punctuation, or dangling modifiers, and then suggests corrections.

When implementing, use pre-trained parsers from libraries like spaCy or Stanza rather than building one from scratch; NLTK is better suited to experimenting with hand-written grammars. For maximum impact, combine parsing with Named Entity Recognition (NER) to first identify the key entities (people, companies) and then use parsing to understand exactly how they relate to each other within the text.

9. Relationship Extraction and Knowledge Graphs

Relationship extraction is a sophisticated natural language processing example that identifies and classifies connections between entities in text. It moves beyond just finding names or places, instead pinpointing how they relate to each other, such as "Company X acquired Company Y" or "Person A is the CEO of Organization B." This technology automatically transforms unstructured sentences into structured data, often visualized as a knowledge graph, which maps out these complex networks of information.

For investors and business analysts, this capability is like creating a dynamic, real-time map of an entire industry. It uncovers hidden patterns and opportunities by connecting disparate pieces of information. For example, by analyzing thousands of press releases, you could automatically track which venture capital firms are consistently investing in successful AI startups, revealing valuable co-investment opportunities or emerging market trends. This is one of the more advanced applications of NLP, offering a powerful strategic advantage.

Strategic Value and Implementation

The true power of relationship extraction lies in making complex, interconnected data understandable and actionable.

- Market Intelligence: Instead of manually reading hundreds of articles to track executive movements, you can build a knowledge graph that visualizes career paths. This can signal which companies are winning the talent war or where a key executive's departure might create instability.

- Supply Chain Analysis: Businesses can analyze news and financial reports to map out supplier and partner relationships across their industry, identifying potential risks or dependencies that are not immediately obvious.

- Real-Life Example: Google's Knowledge Graph uses relationship data of this kind to power the information boxes (Knowledge Panels) you see beside search results. When you search for "Elon Musk," it doesn't just show you web pages; it shows structured information like his date of birth, the companies he is associated with (Tesla, SpaceX), and his role at each (CEO).

To implement this effectively, start with well-defined relationship types relevant to your field, like "invested in" or "partnered with." Use a combination of named entity recognition to find the entities and then apply a relationship extraction model to classify their connections. For the most accurate insights, always validate the extracted relationships against reliable sources.
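The two-step idea of finding entities and then classifying the connection between them can be sketched with surface patterns. This is a deliberately naive illustration: the regex-based entity matcher, the three relation types, and every company and person name in the sample text are invented, and a production system would use trained NER and relation models instead.

```python
import re
from collections import defaultdict

# Naive stand-in for NER: a run of capitalized words counts as one entity.
ENTITY = r"([A-Z][\w&]*(?: [A-Z][\w&]*)*)"

# Hypothetical relation types, each tied to one surface phrase.
RELATION_PATTERNS = [
    ("acquired", re.compile(rf"{ENTITY} acquired {ENTITY}")),
    ("invested_in", re.compile(rf"{ENTITY} invested in {ENTITY}")),
    ("ceo_of", re.compile(rf"{ENTITY} is the CEO of {ENTITY}")),
]


def extract_triples(text):
    """Return (subject, relation, object) triples matched by the patterns."""
    triples = []
    for relation, pattern in RELATION_PATTERNS:
        for match in pattern.finditer(text):
            triples.append((match.group(1), relation, match.group(2)))
    return triples


def build_graph(triples):
    """Store triples as an adjacency list: subject -> [(relation, object), ...]."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return dict(graph)


# All names below are fictional.
text = (
    "Northwind Capital invested in Fabrikam AI. "
    "Fabrikam AI acquired Contoso Labs. "
    "Jane Doe is the CEO of Fabrikam AI."
)
graph = build_graph(extract_triples(text))
for subject, edges in graph.items():
    print(subject, edges)
```

Even this toy graph shows why the structure is useful: once "Fabrikam AI" is a node, its investor, its acquisition, and its CEO are all one hop away.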

10. Semantic Search and Vector Embeddings

Semantic search goes beyond simple keyword matching to understand the user's intent and the contextual meaning of a query. Instead of just finding pages with the exact words you typed, it uses vector embeddings, which are numeric representations of text in which similar meanings sit close together, to find content that is conceptually similar. This technology, powered by BERT-style encoders or by embedding models offered through APIs such as OpenAI's, allows a search engine to grasp that a query for "digital money" is closely related to articles about "cryptocurrency" or "blockchain," even if the original search terms aren't present.

This method fundamentally changes how users discover information, creating a more intuitive and relevant experience. For content platforms, it means users can find articles about related ideas, such as a search for "passive income" surfacing content about dividend stocks and real estate investing. This is one of the most powerful natural language processing examples for improving content discovery and building a smarter, more helpful recommendation system that anticipates user needs and connects disparate but related topics.

Strategic Value and Implementation

For businesses with large content libraries, the key is to implement semantic search to boost engagement and user satisfaction.

- Content Discovery: Help users find relevant information they didn't even know to search for. A parent reading about screen time could be recommended articles about managing technology use or digital well-being.

- Knowledge Management: Internal company wikis and databases become far more useful when employees can search by concept rather than needing to know exact document titles or keywords.

- Real-Life Example: Pinterest applies the same embedding idea in what it calls "visual discovery" to recommend pins. If you save a pin of a modern leather armchair, its system doesn't just find other identical armchairs. It picks up on related concepts such as "modern design," "leather furniture," and "living room ideas," and recommends pins for complementary items like minimalist coffee tables or industrial-style lamps.

For implementation, leverage pre-trained models from OpenAI or Cohere for a fast start. To scale, use specialized vector databases like Pinecone or Weaviate, which are designed to handle millions of embeddings efficiently. For maximum relevance, fine-tune the models on your specific domain's content.
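The core of semantic search is ranking documents by the similarity of their embedding vectors. The sketch below uses cosine similarity over tiny hand-written vectors. These 3-dimensional "embeddings," the document titles, and the `search` function are assumptions for illustration only; real embeddings come from a trained model and have hundreds or thousands of dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-written for illustration.
# The dimensions loosely stand for: [crypto, investing income, parenting].
DOCUMENTS = {
    "Intro to cryptocurrency": [0.9, 0.2, 0.0],
    "Dividend stocks for passive income": [0.1, 0.9, 0.0],
    "Managing kids' screen time": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query_vector, top_k=2):
    """Return the titles of the top_k documents most similar to the query."""
    ranked = sorted(
        DOCUMENTS,
        key=lambda title: cosine_similarity(query_vector, DOCUMENTS[title]),
        reverse=True,
    )
    return ranked[:top_k]


# Stand-in embedding for the query "digital money"; a real one comes from a model.
digital_money_query = [0.8, 0.3, 0.0]
print(search(digital_money_query, top_k=1))  # ['Intro to cryptocurrency']
```

The query shares no words with the title it retrieves, which is the whole point. A vector database performs this same nearest-neighbor lookup, but with indexing structures that keep it fast across millions of vectors.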

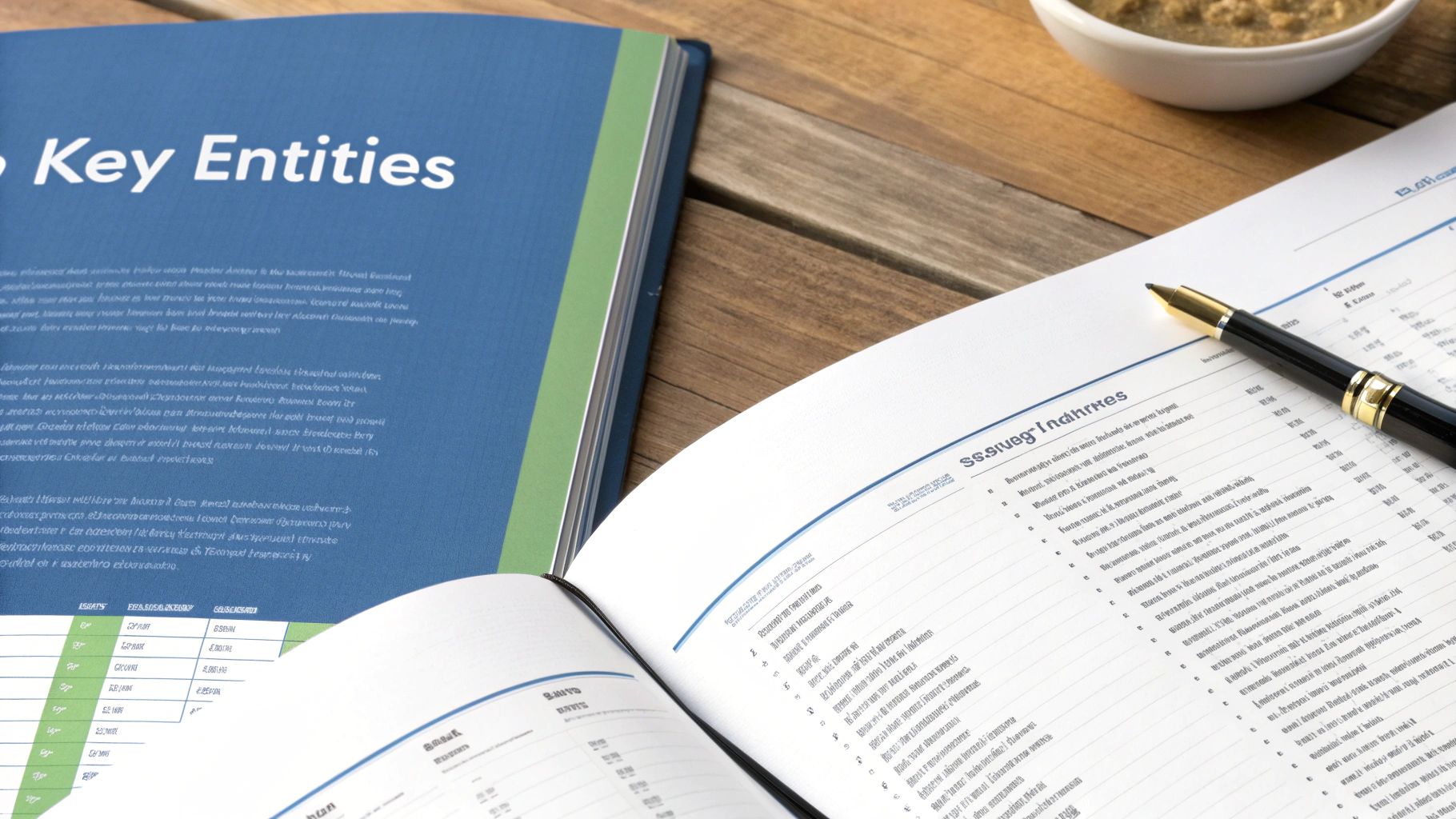

NLP Techniques: A Comparative Overview

To help you choose the right tool for the job, this table compares the ten NLP examples based on their complexity, resource needs, and ideal applications.

| Technique | Implementation Complexity 🔄 | Resource & Speed ⚡ | Expected Outcomes 📊 | Key Advantages ⭐ | Ideal Use Cases & Tips 💡 |
|---|---|---|---|---|---|
| Sentiment Analysis | Moderate: needs labeled data & domain tuning. | Low–Medium: efficient; real-time feasible. | Polarity scores (positive/negative), trend signals, alerts. | Fast market/brand sentiment snapshots; scalable. | Monitor social/news; combine with technical indicators; watch for sarcasm. |
| Named Entity Recognition (NER) | Moderate: pretrained + fine-tuning for domain terms. | Low–Medium: light inference cost. | Structured entities (names, tickers, amounts, dates). | Automates data extraction; improves search and data capture. | Extract company/ticker info from reports; validate against authoritative sources. |
| Machine Translation | High: model selection + domain adaptation is complex. | High: GPUs for training; cloud real-time viable. | Cross-language content access (nuance can vary). | Breaks language barriers; enables global information access. | Use financial/legal translation models; always have human review for critical decisions. |
| Text Classification | Moderate–High: requires quality labeled data or careful unsupervised tuning. | Medium: scales well but needs training data. | Categorized documents; discovered topics. | Organizes content; enables personalization at scale. | Fine-tune pre-trained models on your data; update labels regularly to avoid drift. |
| Chatbots & QA | High: involves retrieval, generation, and context management. | High: large models and vector DBs; real-time costs can be high. | Conversational answers, interactive user support. | Provides instant user assistance; highly scalable engagement. | Use retrieval-augmented generation (RAG) for factual accuracy; include human fallback and citations. |
| Summarization | Moderate–High: extractive is simpler, abstractive is complex. | Medium: moderate compute; near-real-time possible. | Shortened briefs (extractive/abstractive) preserving key points. | Saves significant reading time; highlights actionable insights. | Prefer extractive for factual accuracy (legal/financial); verify abstractive summaries with the source. |
| Keyword Extraction | Low–Moderate: many effective unsupervised options available. | Low: lightweight and very fast. | Ranked keywords, tags, topic cues. | Improves search & content tagging; highly efficient. | Combine multiple algorithms for robustness; use domain term lists for finance/tech. |
| Parsing | High: requires deep linguistic models and complex interpretation. | Medium–High: can be computationally heavy at large scale. | Parse trees, dependency relations for deep analysis. | Foundation for complex NLP tasks like QA and relation extraction. | Use pre-trained parsers (e.g., spaCy); pair with NER for richer, structured extraction. |
| Relationship Extraction | High: relation modeling + graph construction is advanced. | High: significant data, compute, and storage required. | Structured relationship maps and knowledge graphs. | Reveals hidden business connections and industry patterns. | Start with a few focused relation types (e.g., "acquired by"); validate against trusted sources. |
| Semantic Search | Moderate–High: requires embedding models + vector infrastructure. | High: requires GPUs for embeddings + a vector DB; ongoing updates needed. | Conceptually relevant results, improved recommendations. | Finds related content beyond exact keywords; boosts discovery. | Use pre-trained embeddings to start; fine-tune on your domain content for best results. |

Putting NLP to Work: Key Takeaways for Everyday Innovators

From parsing financial reports to summarizing medical research, the natural language processing examples we've explored reveal a powerful truth: NLP is no longer an abstract academic field. It is a practical, accessible toolkit that is actively reshaping industries, creating efficiencies, and unlocking new forms of value for professionals, investors, and lifelong learners. The journey from raw text to actionable insight is now faster and more scalable than ever before.

We've seen how sentiment analysis can provide a real-time pulse on market trends, how named entity recognition can structure chaotic information for quick analysis, and how semantic search connects concepts in ways simple keyword matching never could. Each example, from chatbots in customer service to topic modeling in marketing, demonstrates a shift from manual, time-consuming labor to automated, intelligent systems that augment human capability.

Your Strategic NLP Blueprint

The key takeaway is not just to be aware of these tools but to understand the strategic thinking behind their application. Innovation begins with identifying a point of friction, a repetitive task, or a question that was previously too complex to answer.

- For Investors & Professionals: Think about the hours spent reading through documents. Could automated summarization or relationship extraction give you a competitive edge by surfacing critical connections faster?

- For Entrepreneurs: Consider your customer feedback channels. Could text classification and sentiment analysis automatically categorize feedback, route issues, and identify emerging trends before they become major problems?

- For Everyday Learners: How do you manage information overload? Could keyword extraction and semantic search help you build a personalized knowledge base that connects ideas from articles, books, and notes in a more intuitive way?

The real power of these natural language processing examples lies in their replicability. The models and methods used by large corporations are increasingly accessible through open-source libraries like spaCy and Hugging Face Transformers or user-friendly APIs from major cloud providers. The barrier to entry for experimenting with and implementing NLP has never been lower.

Moving from Theory to Action

Mastering these concepts is about more than just technological literacy; it's about developing a new problem-solving lens. When you encounter a challenge involving large amounts of text, you can now ask, "Is there an NLP approach that could solve this more effectively?" This mindset is what separates passive observers from active innovators. By understanding the 'how' and 'why' behind these applications, you are equipping yourself to not only navigate the future of technology but to help build it. The next wave of breakthroughs will come from those who can creatively combine these tools to solve unique, real-world problems.

Frequently Asked Questions about Natural Language Processing

1. What is Natural Language Processing (NLP) in simple terms?

In simple terms, NLP is a field of artificial intelligence (AI) that enables computers to understand, interpret, and generate human language—both text and speech. Think of it as teaching a machine to read, listen, and write like a person.

2. What's the difference between NLP and NLU?

NLP (Natural Language Processing) is the broader field covering all aspects of machine-human language interaction. NLU (Natural Language Understanding) is a subfield of NLP focused specifically on comprehension—determining the intent and meaning behind the text, dealing with ambiguity and context.

3. How does NLP work?

NLP works by breaking human language into smaller pieces (tokenization), analyzing grammatical structure (parsing), identifying key entities (named entity recognition, or NER), and then using machine learning models to determine context and meaning. Modern NLP relies heavily on deep learning models such as transformers, which learn many of these steps directly from data instead of through separate hand-built stages.
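As a rough illustration of the first and third steps, the sketch below tokenizes a sentence with a regular expression and tags entities by looking them up in a small table. The lookup table, labels, and function names are hypothetical, and parsing is left out entirely. Real pipelines, such as those built with spaCy, use trained statistical models for each of these stages.

```python
import re

# Hypothetical lookup table; real NER relies on trained models, not fixed lists.
KNOWN_ENTITIES = {
    "tesla": "ORG",
    "apple": "ORG",
    "london": "LOC",
}

# Words become one token each; every punctuation mark becomes its own token.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def tokenize(text):
    """Split raw text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


def tag_entities(tokens):
    """Return (token, label) pairs for tokens found in the lookup table."""
    return [
        (tok, KNOWN_ENTITIES[tok.lower()])
        for tok in tokens
        if tok.lower() in KNOWN_ENTITIES
    ]


tokens = tokenize("Tesla opened an office in London.")
print(tokens)
print(tag_entities(tokens))
```

A lookup table like this would also label the fruit in "I ate an apple" as an organization. That kind of ambiguity is exactly what model-based approaches are designed to resolve using context.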

4. What are the biggest challenges in NLP?

The biggest challenges in NLP include handling ambiguity (words with multiple meanings), understanding sarcasm and irony, dealing with grammatical errors in user-generated text, and managing the context of a long conversation.

5. Is Siri or Alexa an example of NLP?

Yes. Siri, Alexa, and Google Assistant are prime examples of NLP in action. They use speech recognition to convert your voice to text, NLU to work out your command or question, natural language generation (NLG) to compose a reply, and text-to-speech to read that reply aloud.

6. Can I use NLP without being a programmer?

Absolutely. Many modern AI tools and platforms offer no-code or low-code interfaces for NLP tasks. You can use services like Google's AutoML, MonkeyLearn, or even advanced features in tools like Zapier to perform text classification, sentiment analysis, and keyword extraction without writing code.

7. What is the difference between extractive and abstractive summarization?

Extractive summarization works by identifying and pulling the most important sentences directly from the original text to form a summary. Abstractive summarization goes a step further by generating entirely new sentences that capture the core meaning of the original text, much like a human would.
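The extractive approach is simple enough to sketch in a few lines. The example below scores each sentence by the average frequency of its words across the whole text and keeps the top-scoring sentences in their original order. The stopword list, the sample text, and the function names are assumptions made for illustration. Production summarizers use far stronger scoring, and abstractive summarization requires a generative model that this sketch does not attempt.

```python
import re
from collections import Counter

# Hypothetical stopword list, kept short for readability.
STOPWORDS = {"a", "an", "the", "was", "is", "are", "from", "of", "to", "in", "and"}


def split_sentences(text):
    """Naive sentence splitter: break after ., !, or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(sentence):
    """Lowercased words of a sentence with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]


def summarize(text, max_sentences=2):
    """Pick the highest-scoring sentences and return them in original order."""
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence):
        words = content_words(sentence)
        # Average word frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)


TEXT = (
    "NLP models read text. "
    "Summaries help readers scan long text quickly. "
    "The weather was pleasant yesterday. "
    "Extractive summaries copy sentences from the text."
)
print(summarize(TEXT))
```

Every sentence in the output is copied verbatim from the input, which is the defining property of extractive summarization.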

8. What are "transformers" in the context of NLP?

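Transformers are a deep learning architecture that has revolutionized NLP. Introduced in the 2017 paper "Attention Is All You Need," they rely on a mechanism called self-attention, which lets the model weigh every word in a passage against every other word. That makes them exceptionally good at handling context and long-range dependencies in text, which is why models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) are so powerful.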
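The core operation from that paper, scaled dot-product attention, computes softmax(Q K^T / sqrt(d_k)) V. The sketch below implements it with plain Python lists so the arithmetic is visible. The toy vectors are invented for illustration, and the sketch leaves out the learned projections, multiple heads, and masking that a real transformer layer adds around this step.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for lists of equal-length vectors.

    Returns (outputs, weights): one output vector and one weight row per query.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        row = softmax(scores)
        weights.append(row)
        # Output is the weighted average of the value vectors.
        outputs.append([
            sum(w * v[col] for w, v in zip(row, values))
            for col in range(len(values[0]))
        ])
    return outputs, weights


# Toy example: one query that points in the same direction as the first key.
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]

outputs, weights = attention(queries, keys, values)
print(weights)
print(outputs)
```

Because the query aligns with the first key, the first value vector receives the larger weight and dominates the output. In a full model, the same mechanism lets each word draw on the most relevant words around it.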

9. How is NLP used in finance?

In finance, NLP is used for algorithmic trading based on sentiment analysis of news, automating the extraction of data from financial reports (NER), powering chatbots for customer service, and ensuring compliance by analyzing legal documents and communications.
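As a simplified picture of the sentiment piece, the sketch below scores news headlines against a small word list and flips the polarity of a word that follows a negator. The lexicon, the negator list, the headlines, and the function names are all hypothetical. Real trading systems generally use finance-specific lexicons or trained models, and nothing here should be read as a trading signal.

```python
import re

# Hypothetical mini-lexicon: +1 for positive words, -1 for negative words.
LEXICON = {
    "beats": 1, "surge": 1, "growth": 1, "upgrade": 1,
    "misses": -1, "plunge": -1, "lawsuit": -1, "downgrade": -1,
}
NEGATORS = {"no", "not", "without"}


def headline_score(headline):
    """Sum word polarities, flipping a word's sign if a negator precedes it."""
    tokens = re.findall(r"[a-z]+", headline.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = LEXICON.get(tok, 0)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def label(score):
    """Map a numeric score to a coarse sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


HEADLINES = [
    "Acme beats estimates as growth accelerates",
    "Shares plunge after lawsuit",
    "No growth in quarterly sales",
    "Board meets on Tuesday",
]
for headline in HEADLINES:
    print(label(headline_score(headline)), "|", headline)
```

A word list cannot detect sarcasm or weigh how much a particular event matters to a company, which is why production systems lean on models trained on labeled financial text.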

10. What is the future of NLP?

NLP is moving toward more sophisticated multimodal models that can interpret text, images, and audio together. We can also expect more personalized, context-aware AI assistants, closer human-machine collaboration, and models that handle increasingly nuanced and complex language tasks with accuracy approaching that of humans.

The world of AI and NLP is evolving at an incredible pace. To stay ahead of the curve with deep-dive analyses, practical guides, and strategic insights into the technologies shaping our future, subscribe to Everyday Next. We provide the essential context you need to turn complex topics like these natural language processing examples into your next competitive advantage. Join the community at Everyday Next and start building your future today.